#4 Furry Circuits

Cold emails to startups, mukbanging, the best todo app, dopamine prompting questions, and Pokémon-playing AIs

The way we interact with systems is changing.

Soon, I'll code, shop, and write essays using a simple chat interface. No more clicks, dashboards, or navigation bars. The chat input will fade away in time, allowing me to use my natural voice to interact with the system instead. A camera built into the screen will track my eye movements. It will drag, drop, and move windows based on where I look. There won't be a need for a keyboard anymore. Only a small clicking device I can hold between my index finger and thumb. I don't know if this is a good thing or a bad thing. I know it will take us time to reach our destination. We will create a lot of slop along the way.

Anyways, here are some links for the week ahead:

"Perhaps more important than what you should do in a cold email is what you shouldn’t do." This is a great guide on Making your [cold] email [to startups] 99 percent less bad. Reading through it, I found myself thinking, "Yep, definitely did that thing I shouldn't have," and "Oh, I should have included that." The framework is refreshingly simple. In short, your cold email should say: who you are, why you are reaching out, and why they should care. It shouldn't: be more than 200 words, use fancy words/abbreviations, be sent without an ask, be vague and be dishonest. The article includes helpful examples that illustrate each point—definitely worth reading the full breakdown.

I don't know why this is a thing, I don't know who started it, and I frankly don't want to know anything more about it. It's called mukbanging—wtf?

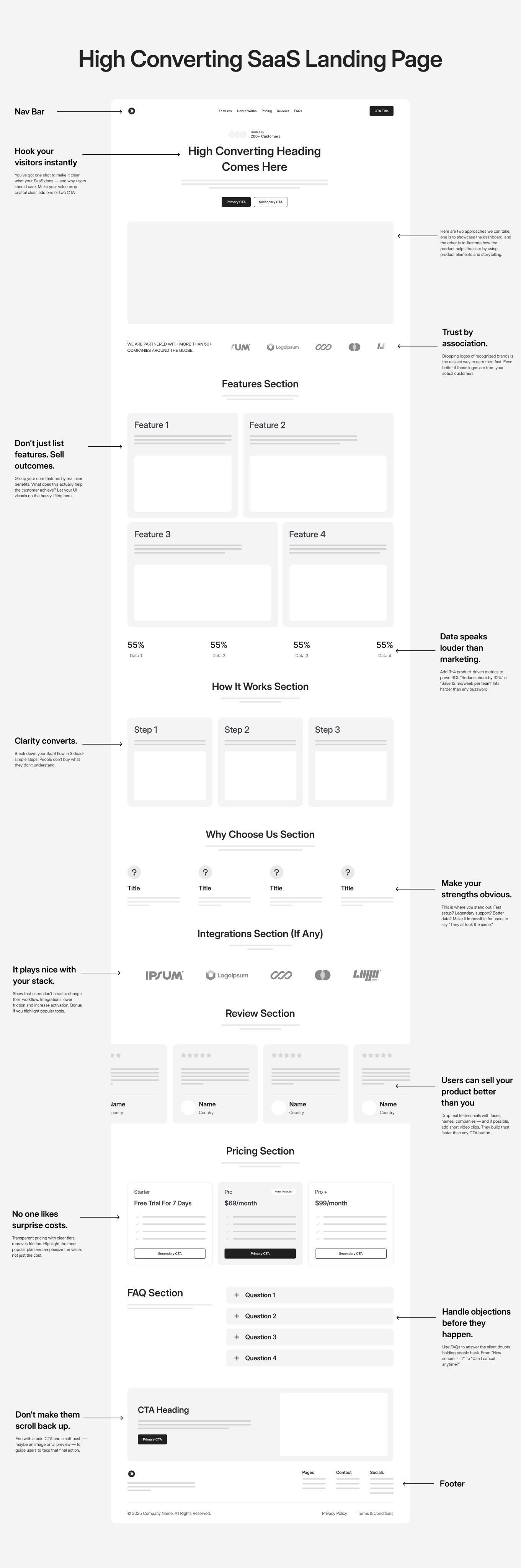

I've always struggled to structure a landing page for a website but I recently found a very effective cheatsheet. I've started to reference this cheatsheet for the landing page of my project tmplate.xyz.

This is huge for e-commerce—and a perfect example of that conversational future I mentioned earlier. Shopify Agents will change how people shop online via Shopify stores. Instead of clicking through product pages, filters, and checkout flows, you'll just talk to the store. Picture this conversation:

You: "Find me X car tool part and sort by cheapest"

AI: "Here you go"

You: "Now add the cheapest two to my cart and suggest which one is better to buy based on quality, seller, and product reviews"

AI: "I suggest you purchase option A, based on assumptions Y. Would you like to make this purchase?"

You: "Yes, make the purchase"

AI: "Everything is good to go, click confirm to make your purchase and we'll send you a confirmation email. Would you like me to remove option B from your cart?"

You: "Yes"

"I've tried them all. Notion, Todoist, Things 3, OmniFocus, Asana, Trello, Any.do, TickTick. I even built my own todo app once (spoiler: I never finished it). After years of productivity app hopping, I'm back to where I started: a plain text file called todo.txt." This is the exact thing that happens to me, except it's in a file named "Untitled" in Obsidian. As pointed out in the comments on HackerNews, "people want their system, however esoteric, to come naturally to them." I have yet to encounter a to-do system that allows for this, but maybe with LLMs something could be possible. There's an idea in there somewhere...

"There is a rumor floating around tech-twitter that Cursor makes just ten cents for every dollar it spends. [...] These companies are beloved on Twitter, raise at billion-dollar valuations and build beautiful user experiences. The approaching bubble pop? They don't own the product and they don't control the supply chain. They live and die by the labs upstream. If OpenAI, Anthropic, and Meta are the chemists, then startups like Cursor, Bolt and Lovable are the dealers." This is a super analogy. When I first started using (and/or abusing) Cursor, I couldn't wrap my head around how much usage I could get from LLM providers, some of which I'd never even visited their official sites and used it to consume their product directly.

"Nobody cares about your blog." When you start writing online, you quickly realize that for the large number of people who use the internet (63%—5.4bn in 2023), it is infinitely large in space (0.001% or ~3,000 domains make up 50% of all web traffic). It can feel like your voice is echoing into the void—but write for yourself, and remember that writing is thinking.

This might not come as a surprise—I really don't want to spoil it for you, and I'm trying so hard not to. But here's James Cameron doing a scientific study to determine whether Jack could have fit on the door with Rose in Titanic.

A fascinating essay from Wendell Berry published in the '80s titled Why I am Not Going to Buy a Computer. His logic shifts from practical concerns about cost and necessity to deeper ethical concerns about the computer replacing his wife, who is also his typist and editor: "What would a computer cost me? More money, for one thing, than I can afford, and more than I wish to pay to people whom I do not admire. But the cost would not be just monetary. It is well understood that technological innovation always requires the discarding of the 'old model' - the 'old model' in this case being not just our old Royal standard, but my wife, my critic, my closest reader, my fellow worker." And my favorite line from the essay, in which Berry discusses how using a computer to write would contradict the very reason he is writing: "I would hate to think that my work as a writer could not be done without a direct dependence on strip-mined coal. How could I write conscientiously against the rape of nature if I were, in the act of writing, implicated in the rape?" Many people today are hesitant to let LLMs handle their creative tasks. As with computers, full adoption seems inevitable. The key issue is whether we can hold on to our values as we embrace new tools.

"When you're designing a system, there are lots of different ways users can interact with it or data can flow through it. It can get a bit overwhelming. The trick is to mainly focus on the 'hot paths': the part of the system that is most critically important, and the part of the system that is going to handle the most data." This isn't just good system-design practice—it's fantastic product development. Because the hot path is also the value path. That single, golden workflow is where your product delights (or disappoints) every customer, where latency is felt, where errors cost trust, and where data fuels insight. If you make that path blazingly fast, observably reliable, and ruthlessly simple, everything else around it falls into place.

I really like the idea in this post, but I think it misses something important. Andrew Kelly asks: if renting physical space seems obviously wasteful when you could own, "why is it, then, that people think 'moving to the cloud' is a good idea?" He extends this to AI-assisted programming, calculating that heavy LLM usage could cost $576 to $90,000 per month. His alternative? "My monthly costs as a programmer are $0. I use free software to create free software. I can program on the train, I can program on a plane, I can program when my ISP goes down." Like other programmers, I'm drawn to the local-first philosophy. But Kelly's argument assumes managing your own infrastructure is the highest-value use of your time. For most of us shipping products, that's backwards. I'd rather focus on the product people consume than reinvent deployment pipelines. Companies like Vercel and Cloudflare offer generous free tiers anyway. With AI programming, I agree we should rely on LLMs less, but not stop entirely. The real insight isn't about the cost of renting versus owning; it's about where you choose to spend your finite attention. Sometimes renting is the smarter choice.

As an aside to the above point, I do realise that my machine (MacBook Air M2) is extremely powerful, even for the type of development work I do on it. I wonder if we’ll ever see a company rent individual users’ compute, i.e., “let us intelligently reserve 10% of your CPU time whilst your machine is running to train our LLM, and in return we’ll give you a share of our profits”. Would make sense, right?

This is J.R.R. Tolkien's The Hobbit, but Soviet Russia edition. M. Belomlinskij's illustrations are ones that I had never seen before. Brilliant.

There are people whose brains operate at a level of detail that I find slightly disconcerting—but that’s what happens when you study human behaviour like Vanessa Van Edwards does. In her TED Talk, You Are Contagious, she shares “dopamine-prompting questions”—simple swaps for dull small talk that light up reward pathways and invite stories. Instead of “What do you do?” try “What are you working on that you’re excited about?” Instead of “How was your weekend?” try “What was the best part of your weekend?” Instead of “Where are you from?” try “What place feels like home—and why?” Small reframes that can create much better conversations. It feels like mind-tricks sometimes.

Here are two very different approaches to solving Pokémon (a role-playing game) using Artificial Intelligence. The first, Claude Plays Pokémon, hooks the Claude LLM up to an emulator, gives it tools to control the game, and feeds screen captures back to it so it can iterate. The second approach, Training AI to Play Pokemon with Reinforcement Learning, uses Reinforcement Learning to simulate thousands of agents playing the game simultaneously, learning through trial and error to optimize for specific rewards like catching Pokémon or winning battles. The Claude approach leverages existing reasoning abilities but struggles with the game's complexity and long-term planning. The RL approach excels at optimization but requires extensive training time and can't easily adapt to new scenarios outside its training environment. The human in the loop also has to cherry-pick the agents that did well under different parameters, versus those that didn't, for the next iteration. I can’t help thinking a hybrid works best: let the LLM set goals, shape rewards, and write/modify the RL code; let RL handle low-level control. Loop it: the LLM proposes experiments → run sims at scale → distill strategies and rewards → the LLM refactors the agent. Human oversight stays, but the system self-improves.
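
To make the hybrid loop a bit more concrete, here's a minimal Python sketch of its shape. Everything in it is an assumption of mine, not how either project works: the "game" is a toy walk along a line, `propose_reward` is a hard-coded stand-in where an LLM call would go, and `train_round` uses plain random search over a one-parameter policy instead of a real RL algorithm.

```python
import random

GOAL = 10  # toy "game": walk right along a line until you reach position 10


def run_episode(p_right, rng, steps=30):
    """Low-level control: a one-parameter policy that steps right with probability p_right."""
    pos = 0
    for _ in range(steps):
        pos = max(0, pos + (1 if rng.random() < p_right else -1))
        if pos >= GOAL:
            break
    return pos


def propose_reward(history):
    """Hypothetical stand-in for the LLM: choose the reward for the next round.

    A real system would prompt a model with `history`; this stub ignores it.
    """
    return lambda pos: pos + (5 if pos >= GOAL else 0)


def evaluate(p_right, reward_fn, rng, episodes=20):
    """Average reward over a batch of simulated episodes."""
    return sum(reward_fn(run_episode(p_right, rng)) for _ in range(episodes)) / episodes


def train_round(reward_fn, p_right, rng, n_agents=20):
    """Stand-in for RL (random search): try perturbed agents and keep the best scorer."""
    candidates = [p_right] + [
        min(1.0, max(0.0, p_right + rng.uniform(-0.2, 0.2))) for _ in range(n_agents)
    ]
    return max(candidates, key=lambda p: evaluate(p, reward_fn, rng))


def hybrid_loop(rounds=5, seed=0):
    rng = random.Random(seed)
    p_right, history = 0.5, []
    for _ in range(rounds):
        reward_fn = propose_reward(history)             # "LLM" sets the goal and reward
        p_right = train_round(reward_fn, p_right, rng)  # run sims at scale
        history.append((p_right, evaluate(p_right, reward_fn, rng)))  # distill results
    return p_right, history


if __name__ == "__main__":
    policy, log = hybrid_loop()
    print(f"final p_right={policy:.2f} after {len(log)} rounds")
```

The interesting part is the seam: `history` is the only thing passed back to the planner, so that's where a real LLM would read results and rewrite the reward (or the agent code) before the next round.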

We’re basically at the point where you box an LLM, hand it a tiny brief, and let it rip via simulation. Claudeputer is very much a toy example; the next wave pairs tighter sandboxes with clearer evals and genuinely valuable outcomes.

Thanks for reading! (。◕‿◕。)